gengaze (2025)

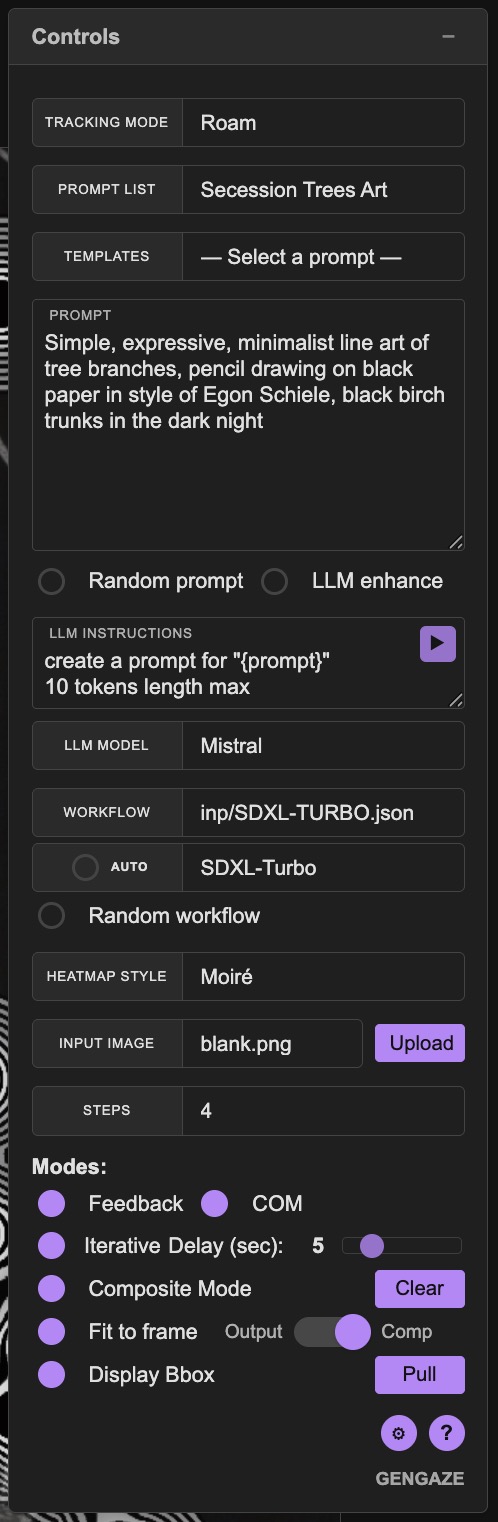

Started as part of a broader exploration into vibe-coding, GenGaze is a browser-based system for generating images based on patterns of attention. Developed as a modular, self-contained tool for experimentation and hybrid control, it (currently) supports four input types: gaze tracking, hand tracking, saliency prediction, and randomised roaming. Each input method produces a stream of coordinates that accumulate into a heatmap.
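As a rough illustration of how a coordinate stream can accumulate into a heatmap, here is a minimal sketch. The `Heatmap` class, the `Sample` type, the grid resolution and the Gaussian falloff are all assumptions made for this example; they are not taken from GenGaze's code.

```typescript
// Hypothetical sketch: names and parameters are illustrative, not GenGaze's own.
interface Sample {
  x: number; // horizontal position, normalised to [0, 1]
  y: number; // vertical position, normalised to [0, 1]
}

class Heatmap {
  readonly cols: number;
  readonly rows: number;
  readonly data: Float32Array; // row-major grid of accumulated weight

  constructor(cols: number, rows: number) {
    this.cols = cols;
    this.rows = rows;
    this.data = new Float32Array(cols * rows);
  }

  // Add one sample, spreading its weight over nearby cells with a Gaussian falloff.
  add(s: Sample, radius: number = 2): void {
    const cx = Math.min(this.cols - 1, Math.max(0, Math.floor(s.x * this.cols)));
    const cy = Math.min(this.rows - 1, Math.max(0, Math.floor(s.y * this.rows)));
    const sigma = Math.max(radius, 1) / 2;
    for (let dy = -radius; dy <= radius; dy++) {
      for (let dx = -radius; dx <= radius; dx++) {
        const x = cx + dx;
        const y = cy + dy;
        if (x < 0 || y < 0 || x >= this.cols || y >= this.rows) continue;
        const falloff = Math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma));
        this.data[y * this.cols + x] += falloff;
      }
    }
  }

  // Largest accumulated value, useful for normalising before display.
  peak(): number {
    let max = 0;
    for (let i = 0; i < this.data.length; i++) {
      if (this.data[i] > max) max = this.data[i];
    }
    return max;
  }
}
```

Under this sketch, any of the four input types could feed the same structure by calling `add` once per incoming coordinate, which is one way a single heatmap could serve several input methods.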

Depending on the selected mode, GenGaze uses this data to run any combination of automated variants of three foundational generative AI workflows. Standard applies an image-to-image transformation that composites the input and the heatmap. Inpainting regenerates the zones of the current frame that the heatmap defines. Outpainting crops around the densest area of the heatmap and expands the image outward.
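The two heatmap-driven steps described above can be sketched as follows: thresholding the heatmap into an inpainting mask, and finding a crop window around the densest cell for outpainting. This is only one plausible reading. The function names, the relative threshold and the use of a single peak cell as "densest area" are assumptions for illustration, not GenGaze's documented behaviour.

```typescript
// Hypothetical sketch: one way to derive a mask and a crop from a heatmap grid.
interface Rect {
  x: number;
  y: number;
  w: number;
  h: number;
}

// Build a binary mask (255 = regenerate, 0 = keep) from cells whose value
// reaches `threshold` as a fraction of the heatmap's peak value.
function inpaintMask(heat: Float32Array, threshold: number = 0.5): Uint8Array {
  let peak = 0;
  for (let i = 0; i < heat.length; i++) {
    if (heat[i] > peak) peak = heat[i];
  }
  const mask = new Uint8Array(heat.length);
  if (peak === 0) return mask; // empty heatmap: nothing to regenerate
  for (let i = 0; i < heat.length; i++) {
    mask[i] = heat[i] / peak >= threshold ? 255 : 0;
  }
  return mask;
}

// Centre a cropW x cropH window on the hottest cell, clamped to the grid bounds.
function densestCrop(
  heat: Float32Array,
  cols: number,
  rows: number,
  cropW: number,
  cropH: number
): Rect {
  let best = 0;
  for (let i = 1; i < heat.length; i++) {
    if (heat[i] > heat[best]) best = i;
  }
  const px = best % cols;
  const py = Math.floor(best / cols);
  const clamp = (v: number, lo: number, hi: number) => Math.min(hi, Math.max(lo, v));
  return {
    x: clamp(px - Math.floor(cropW / 2), 0, Math.max(0, cols - cropW)),
    y: clamp(py - Math.floor(cropH / 2), 0, Math.max(0, rows - cropH)),
    w: Math.min(cropW, cols),
    h: Math.min(cropH, rows),
  };
}
```

In a real pipeline the mask and crop would be scaled from grid cells to image pixels before being handed to the generation workflow. A smoothed or windowed density estimate would likely be more robust than a single peak cell.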

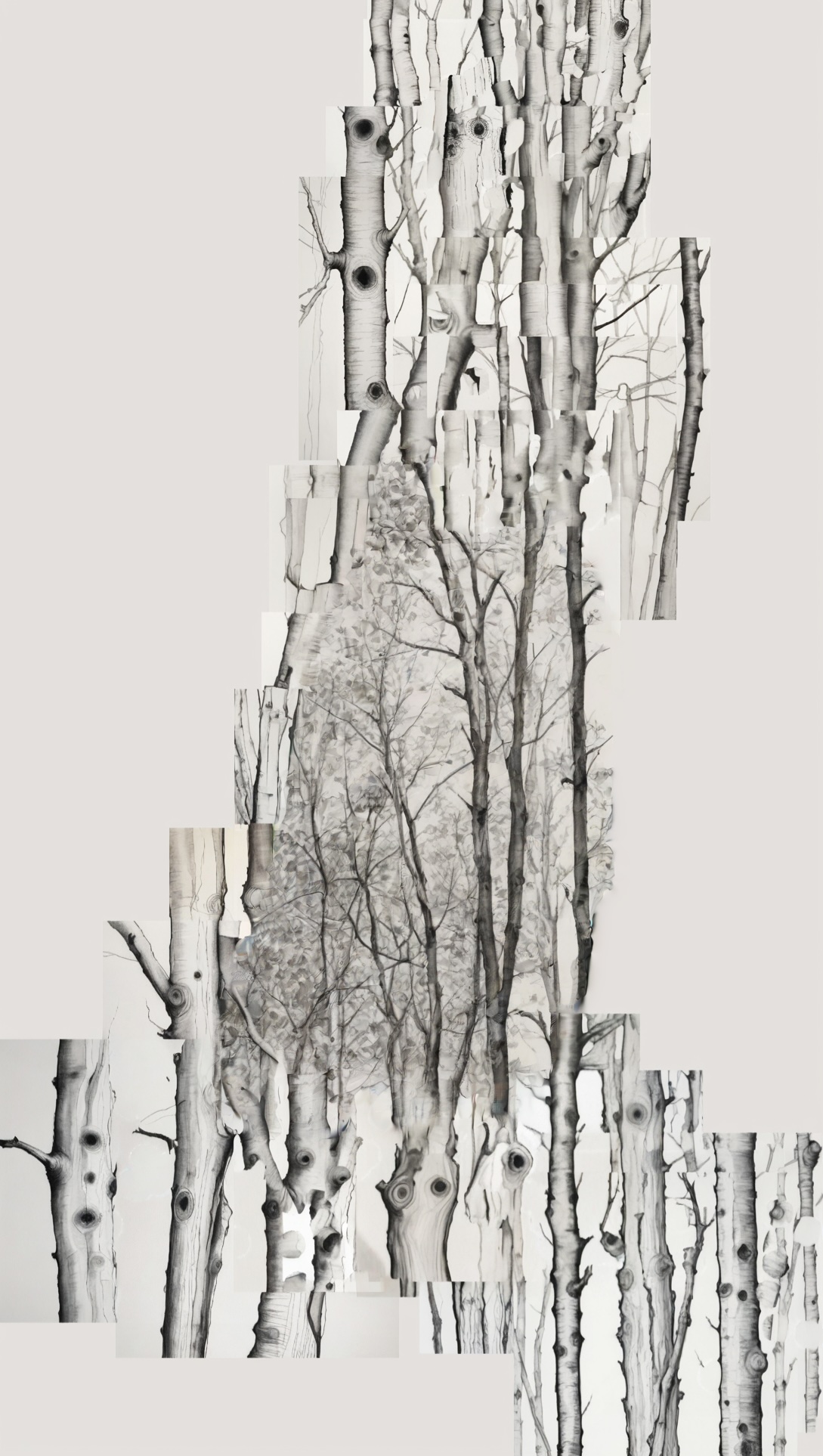

In addition, GenGaze includes iterative and composite modes that extend the system’s creative capabilities. Iterative mode automates generation at set intervals, while composite mode builds an expanding canvas by placing multiple outputs side by side based on attention data.
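The text does not say how composite mode derives placement from attention data. One plausible reading, sketched below, is to extend the canvas on whichever side the heatmap's centre of mass leans towards. The `heatCentroid` and `nextTile` helpers and the tile-grid model are hypothetical.

```typescript
// Hypothetical sketch: choose where the next output tile goes on an expanding canvas.
interface Point {
  x: number;
  y: number;
}

interface Tile {
  col: number;
  row: number;
}

// Weighted centre of mass of the heatmap, normalised to [0, 1] on both axes.
function heatCentroid(heat: Float32Array, cols: number, rows: number): Point {
  let total = 0;
  let sx = 0;
  let sy = 0;
  for (let i = 0; i < heat.length; i++) {
    const v = heat[i];
    total += v;
    sx += v * ((i % cols) + 0.5);
    sy += v * (Math.floor(i / cols) + 0.5);
  }
  if (total === 0) return { x: 0.5, y: 0.5 }; // no attention data: stay centred
  return { x: sx / total / cols, y: sy / total / rows };
}

// Place the next tile beside the last one, on the side the centroid leans towards.
// Ties (including a perfectly centred centroid) fall back to extending rightwards.
function nextTile(last: Tile, centroid: Point): Tile {
  const dx = centroid.x - 0.5;
  const dy = centroid.y - 0.5;
  if (Math.abs(dx) >= Math.abs(dy)) {
    return { col: last.col + (dx >= 0 ? 1 : -1), row: last.row };
  }
  return { col: last.col, row: last.row + (dy >= 0 ? 1 : -1) };
}
```

Iterative mode could then be approximated by calling the generation step on a timer and, when composite mode is also active, passing each new output to `nextTile` to decide where it lands.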

All images are generated using local ComfyUI workflows and displayed in real time. The interface includes a draggable control panel, pan/zoom navigation, and multiple heatmap styles.

GenGaze explores the limits of automation in the creative process, and the question it raises is ultimately one of authorship and perceptual input. It integrates live or simulated tracking data directly into the generation process, so that where and how input accumulates materially affects the result. Rather than illustrating intent, the images function as traces of movement and weighting: structured outputs shaped by behavioural input. In this way, the system links visual form to the evolving dynamics of its own operation.

The app runs on demand at https://gnz.grebenshyo.xyz/ (DM me for access).